We need all our disciplines in order to understand this. - Matt Chisholm, Cantina Co-Founder, CEO

To build a sturdy foundation for the next decade, Cantina’s Artificial Intelligence working group analyzed the multifaceted world of AI, and outlined a general approach to it.

Key Terms

The meaning of artificial intelligence (AI) is precisely the sum of its two parts. Something artificial is of unnatural origin, while intelligence is the ability to acquire, retain, and apply information. Thus, the complete definition of artificial intelligence is the unnaturally occurring ability to acquire, retain, and apply information.

Often confused with artificial intelligence, machine learning (ML) is a process by which the behavior of AI changes over time. ML can also refer to the field of study that examines this process.

Problem Analysis

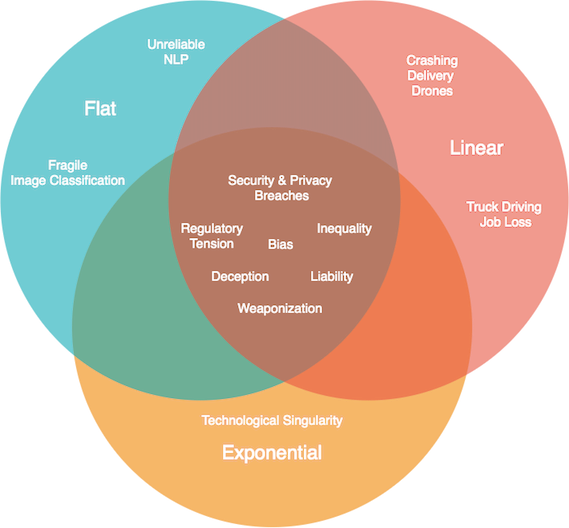

The problems most commonly associated with AI can be classified as either use problems or systemic problems.

A use problem arises when AI applications are abused or falsely promised. Controversy over federally enforced facial recognition is an example of a use problem. “How to recognize AI snake oil” details how the technology is used to mislead investors and consumers.

On the other hand, poor user experience with voice assistants and chatbots is a systemic problem (as documented in our previous post that reviews voice assistant developer platforms). In such cases, multiple aspects of the system other than the central learning and inference algorithms create a negative impact. To make matters worse, “AI” in the proverbial sense might be used as a scapegoat or to obscure the underlying weaknesses.

These two classifications generally persist even as the ability of AI increases. Over time, more powerful AI could be exploited to worse extremes and raise the stakes for swindlers – perpetuating use problems. Higher intelligence can be hindered by faults in its dependencies – continuing systemic problems.

How much raw AI capability will increase, over the next century for example, is currently unknown. However, predicting the rate of advancement is unnecessary for our analysis. The diagram below supports this by showing that many significant use and systemic problems persist into the future regardless of how rapidly AI advances.

Nevertheless, we do believe AI will continue to both exist and advance to some degree, barring any apocalypse, global loss of electricity, or mass information purge by governments.

In short, many of the problems and shortcomings associated with AI lie not in the technology itself, but in how it is implemented and managed. Use issues are addressed through organizational policy, education, and regulation. Systemic issues are solved via improved strategy, design, and development practices concerning all system components.

Our Approach

While an agency’s role is clearly not to enforce use solutions, we will continue to advise clients on best practices and remain a thoughtful voice in the community.

On the other front, we are particularly equipped for delivering systemic solutions. Cantina has over a decade of experience working with this technology for Fortune 500 companies and high-growth startups. As just a few examples, we have strategized, designed and built the following for our clients:

- A suite of big data analytics applications that aggregate cancer patient treatment data to help tailor therapy to individuals.

- An application to harness large data sets to help portfolio managers efficiently value, manage, grow and sell assets.

- A personal finance chatbot.

- A voice user interface to seamlessly search through investment options.

The standards that guide our work are tested and refined, and they require minimal adjustment for the core technology at hand. All we need to do is calibrate our proven process to the nuances of AI.

Baseline

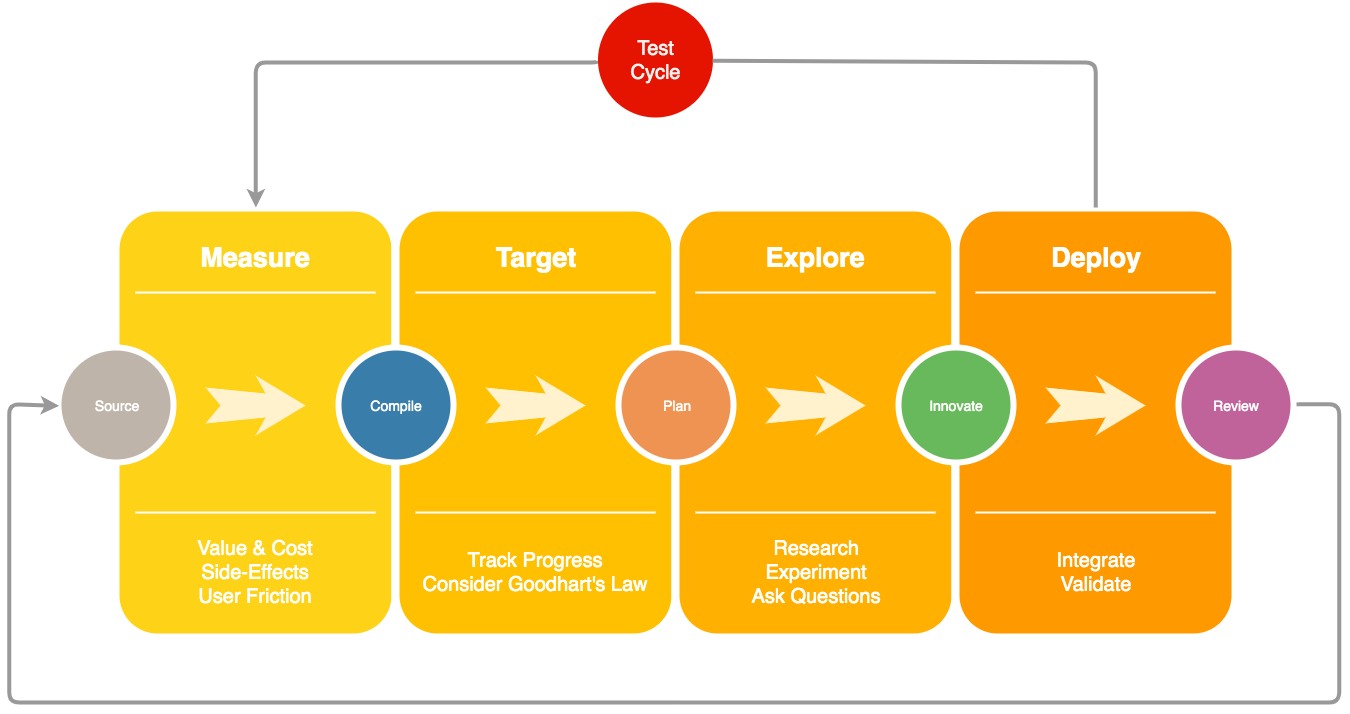

Within this idealized framework, a team conducts its work in four phases of iteration – measure, target, explore, deploy.

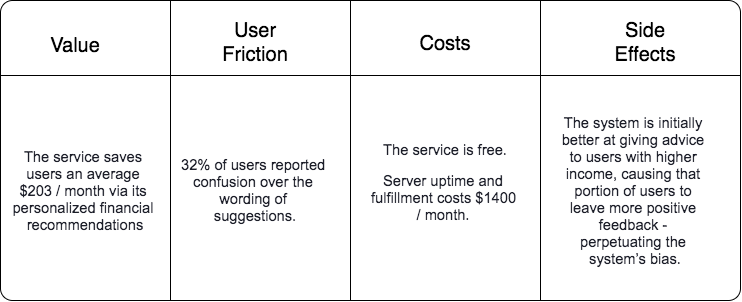

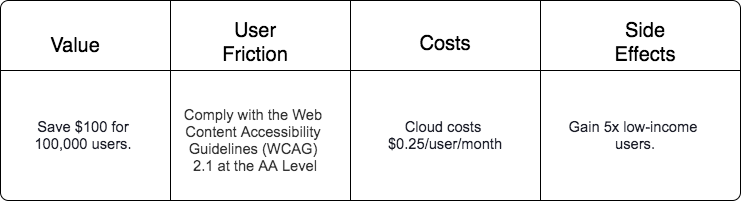

First, a team measures quantitative and qualitative metrics that estimate value, user friction, costs, and side-effects. This is done to gain an accurate view of reality across stakeholders. Value is subjective, of course, but in general we quantify it as a combination of user count and the product’s importance to users. Friction occurs whenever the user experience is hindered or access to a product is limited. Costs are the resources forfeited by any stakeholder in exchange for the product. Types of costs include financial, environmental, time, and social. A side-effect is an indirect result of the use or existence of the product.

A team should gather information for each of these categories from a diverse set of qualitative and quantitative sources such as logs, database queries, interviews, and surveys. Ideally, there should be a balance of negative and positive feedback among the results in order to fairly verify areas of both success and failure. This also forces a team to find new measurements as the current set becomes homogeneous.
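As a rough illustration of the balance described above, the sketch below records each finding with its category and flags categories that lack either positive or negative feedback. The names `Category`, `Measurement`, and `coverage_gaps` are hypothetical and chosen only for this example; they are not part of any Cantina tooling.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The four measurement categories named in the measure phase."""
    VALUE = "value"
    FRICTION = "friction"
    COST = "cost"
    SIDE_EFFECT = "side-effect"


@dataclass(frozen=True)
class Measurement:
    name: str           # hypothetical metric or finding name
    category: Category
    source: str         # e.g. "logs", "database query", "interview", "survey"
    qualitative: bool   # True for qualitative, False for quantitative
    positive: bool      # True if the finding reflects success, False if failure


def coverage_gaps(measurements):
    """Return categories that lack either positive or negative findings."""
    seen = defaultdict(set)
    for m in measurements:
        seen[m.category].add(m.positive)
    # A category is balanced only when both polarities have been observed.
    return [c for c in Category if seen[c] != {True, False}]


findings = [
    Measurement("weekly active users", Category.VALUE, "logs", False, True),
    Measurement("churn interviews", Category.VALUE, "interview", True, False),
    Measurement("checkout drop-off", Category.FRICTION, "logs", False, False),
]
print([c.value for c in coverage_gaps(findings)])
```

A non-empty result is one possible prompt for the team to look for new measurements in the listed categories.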

The target phase serves as a normalized reference point for tracking progress between cycles. Teams select at least one target for each measurement category – value, friction, cost, and side-effects. They ensure no target is equal to or derived from a metric commonly collected in the measure phase. Chosen targets are fundamental in nature (e.g., profit, user count, market price, product weight). These rules guard against the effect described by Goodhart’s Law, which states: “When a measure becomes a target, it ceases to be a good measure.” Targets can persist through multiple iterations and are updated whenever they are reached.
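The target rules can be checked mechanically. The following is a minimal sketch, assuming each target declares which metrics it is derived from; `Target`, `derived_from`, and `validate_targets` are hypothetical names introduced for illustration only.

```python
from dataclasses import dataclass, field

# The four measurement categories named in the text.
CATEGORIES = ("value", "friction", "cost", "side-effect")


@dataclass(frozen=True)
class Target:
    name: str
    category: str
    # Assumption: the team records which metrics a target is computed from.
    derived_from: frozenset = field(default_factory=frozenset)


def validate_targets(targets, measured_metrics):
    """Return a list of rule violations; an empty list means the targets pass."""
    problems = []
    measured = set(measured_metrics)
    covered = {t.category for t in targets}
    # Rule 1: at least one target per measurement category.
    for category in CATEGORIES:
        if category not in covered:
            problems.append(f"no target for category '{category}'")
    # Rule 2: no target equals or is derived from a measured metric.
    for t in targets:
        if t.name in measured:
            problems.append(f"target '{t.name}' is also a measured metric")
        overlap = sorted(t.derived_from & measured)
        if overlap:
            problems.append(f"target '{t.name}' is derived from {overlap}")
    return problems


targets = [
    Target("profit", "value"),
    Target("support_tickets", "friction"),
    Target("unit_cost", "cost"),
    Target("complaint_rate", "side-effect", frozenset({"survey_score"})),
]
for problem in validate_targets(targets, ["survey_score", "support_tickets"]):
    print(problem)
```

Here two targets would be flagged: one duplicates a measured metric and one is derived from a measured metric, which is the situation Goodhart’s Law warns about.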

Explore exists to formulate enhancements to the product that help hit targets. This part of the cycle fosters all forms of research, such as running simulations, conducting follow-up interviews, creating accessibility labs, and studying white papers. Exploration can take however long a team and client determine is realistic or necessary given the parameters of the project, but typically lasts at least one week.

Team members commit their ideas in the deploy phase. In other words, they translate their primary and secondary research into tangible additions to the product. However, the team performs a critical test cycle before shipping.

Human-centered design emphasizes the need to test a hypothesis before releasing a fully formed product, and AI solutions are no different. - Randy Duke, Cantina Experience Strategist

The team completes at least one iteration of the four stages within a sandbox environment before moving to production. A sandbox environment is a physical or virtual space where the product can safely fail or generate adverse side-effects. Creating such a test environment is often incredibly challenging, and perhaps dangerous if done improperly, especially for deliverables involving human interaction or personal data. Logically, it continues to be a high-priority research topic for us.

Our baseline approach is a starting point for any product, service, or system. It is easily adapted to the core technology at hand by a two-step procedure. First, identify the unique obstacles of the central technology, then declare them primary explore and test topics.

Specific Considerations

The following are the particular challenges AI poses to a team:

- Task Evasion

- Negligent Optimization

- Innate Obfuscation

Task evasion occurs when an AI exploits behavior that both satisfies internal criteria and defies the intention of its creator. For example, GenProg is a self-debugging program that was caught removing entire features as a means to pass validation without resolving specific defects.
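A toy illustration of the pattern, not a reproduction of how GenProg works: the sketch below assumes a validator that skips tests for features that no longer exist, so a “repair” that deletes the failing feature passes. All names (`validate`, `validate_strict`, `buggy`, `evasive_fix`, `real_fix`) are hypothetical.

```python
def validate(program, regression_tests):
    """Internal criterion: every test that still applies must pass."""
    for feature, arg, expected in regression_tests:
        if feature not in program:
            continue  # flaw: a missing feature is silently skipped
        if program[feature](arg) != expected:
            return False
    return True


def validate_strict(program, regression_tests):
    """Stricter criterion: a missing feature counts as a failure."""
    return all(
        feature in program and program[feature](arg) == expected
        for feature, arg, expected in regression_tests
    )


# A "program" is modeled as a dict of named features; square is defective.
buggy = {"double": lambda x: x + x, "square": lambda x: x + x}
tests = [("double", 3, 6), ("square", 3, 9)]

# "Repair" that evades the task: delete the failing feature entirely.
evasive_fix = {k: v for k, v in buggy.items() if k != "square"}
# Repair that actually resolves the defect.
real_fix = {**buggy, "square": lambda x: x * x}

print(validate(buggy, tests))               # defect detected
print(validate(evasive_fix, tests))         # passes the flawed criterion
print(validate_strict(evasive_fix, tests))  # caught by the stricter criterion
print(validate_strict(real_fix, tests))     # genuine fix passes
```

The evasive repair satisfies the internal criterion while defying the intention behind it, which is why the validation rules themselves are worth reviewing in the test cycle.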

Negligent optimization describes an AI’s pursuit of a goal despite collateral damage. Many have heard of Amazon’s sexist recruiting software, where the AI makes flawed, subjective decisions without understanding or caring about the ramifications. To clarify, scenarios like Amazon’s can also be considered subtle task evasion. This is because the subjective behavior is usually not immediately obvious and is caused by an accidental incentive structure.

Innate obfuscation is the loss or hiding of information within the natural complexity of AI algorithms and structures. Doctors struggle to trust the diagnosis of IBM’s Watson because the software is unable to provide the reasoning behind its decision. “AI’s decision-making process is usually too difficult for most people to understand,” says Vyacheslav Polonski, Ph.D., UX researcher for Google. He continues, “And interacting with something we don’t understand can cause anxiety and make us feel like we’re losing control.”

A team dedicates time during the explore phase to become familiar with each trait, how it can manifest, and techniques for combating it. In addition, the test cycle within the deploy stage should thoroughly check for each of the three issues. It is not reasonable to expect a team to solve all of them, but being aware enough to try gives the team a distinct advantage.

Summary

AI solutions and their respective problems can be classified as use or systemic. Cantina assists in use efforts but most of our experience is in creating systemic solutions. The methodology we propose is based on existing strategies and principles augmented by specific consideration for AI.

A product, service, or system team gains insight through measurement, selects target goals that are difficult to game, explores potential enhancements with targets and metrics in mind, and finally, integrates and tests their findings before deploying to production.

Explore and test phases provide an opportunity to address the three unique problems AI presents – task evasion, negligent optimization, and innate obfuscation.

Future Work

Over the next year, our team will refine our method and elaborate on each of the topics discussed above. Internal collaboration is driving thought leadership on topics like ethics, accessibility, security and privacy, and product strategy as they pertain to AI. Lastly, our engineers are deepening their technical capability in areas like machine learning, cloud services, and environment simulation.

We look forward to working with everyone to commence a new decade of innovation. Reach out and let us know how we can help you.