Today’s voice UI has come a long way, but it can go much further. Consider a user study on the usability of voice assistants conducted by the Nielsen Norman Group. The group asked participants to complete a series of tasks using Alexa, Google Assistant, or Siri, then followed up with an interview. It concluded that the voice assistants had “an overall usability level that’s close to useless for even slightly complex interactions”. The study also describes an inherent learning curve to using voice assistants that “goes against the basic premise of human-centered design.”

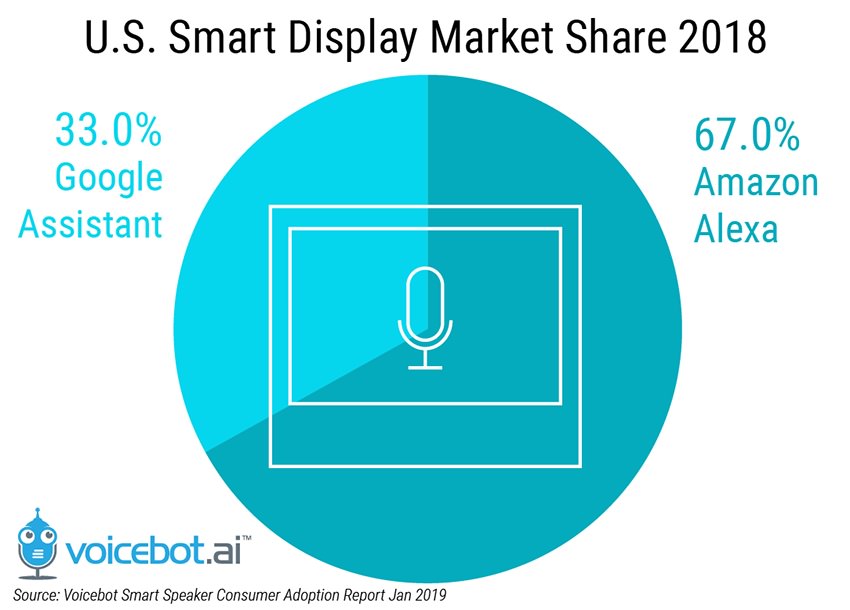

In our view, flawed development tools are partly to blame. Through our research at Cantina, we have identified a number of such flaws. To narrow this article’s focus, we’ve compiled critiques of the Actions on Google, Dialogflow, and Amazon Alexa Skills platforms. There is an ever-growing list of relevant tools we could have examined, but the Google and Amazon platforms currently dominate the industry.

Dialogflow is Google’s high-level service for building human-computer conversations. Actions on Google is tightly coupled with Dialogflow; however, Dialogflow can be used without creating an Action.

Name Pronunciation & Understanding

Telling your voice assistant to “Text Mom” will probably work every time. But what if you want to email Kole at Cantina? A voice assistant will almost certainly try to email Cole at Cantina. Try inviting Marc Gajdosik to your meeting. The results will likely be unpredictable.

Names are hard to pronounce and spell, even for humans. But humans ask for help, and we do better the next time we need to pronounce a tricky name. Neither Dialogflow nor the Alexa platform provides tools for training a model to pronounce, spell, or understand unique names. Our Cantina research team came up with a workaround: adding the assistant’s butchered interpretation of a name as an Entity synonym, so that the incorrect spelling acts as a nickname for the correct one. However, this workaround succeeds only if the model consistently fails in the same set of ways. If the model’s interpretation is unpredictable, synonym mapping will frequently fail.
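To make the synonym workaround and its failure mode concrete, here is a minimal local sketch. The `EntityEntry` shape mirrors the value/synonyms pairs of a custom entity; the `resolveName` helper, the entity contents, and the misrecognized spellings are all hypothetical illustrations, not Dialogflow’s actual matching logic.

```typescript
// One entry in a custom entity: a canonical value plus the synonyms
// that should map to it (mirrors the value/synonyms pairs of an entity).
interface EntityEntry {
  value: string;
  synonyms: string[];
}

// Hypothetical "employee" entity: each misrecognition we have observed
// is registered as a synonym ("nickname") of the correct spelling.
const employeeEntity: EntityEntry[] = [
  { value: "Kole", synonyms: ["Kole", "Cole", "Coal"] },
  { value: "Marc Gajdosik", synonyms: ["Marc Gajdosik", "Mark Guy Dosik"] },
];

// Map a transcribed name to its canonical value. Returns undefined when the
// transcription matches no registered synonym, which is exactly what happens
// when the recognizer mangles a name in a new, unpredictable way.
function resolveName(transcript: string, entity: EntityEntry[]): string | undefined {
  const needle = transcript.trim().toLowerCase();
  for (const entry of entity) {
    if (entry.synonyms.some((s) => s.toLowerCase() === needle)) {
      return entry.value;
    }
  }
  return undefined;
}

console.log(resolveName("cole", employeeEntity)); // "Kole"
console.log(resolveName("Mark Gay Dosick", employeeEntity)); // undefined
```

The second lookup shows why the approach is brittle: every new misrecognition has to be discovered and added by hand before it resolves.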

In the past, we could point to fundamental limitations of natural language technology, but today’s algorithms have proven more than capable. Our model can’t be taught a new name simply because the platform abstracts that control away. Google itself is better placed to alleviate this issue because it has direct access to lower-level components; for example, Google Contacts has phonetic spelling fields that can help the assistant understand and pronounce names.

We hope Google opens this capability to developers soon, because name accuracy is critical to voice UI usability.

Deployment

Our team deployed a beta version of our Alexa Skill to Cantina employees with ease. A developer was able to get the app running on an Echo Dot within minutes, just in time for a scheduled demo. Deploying the Google Assistant Action demo, however, was a different story.

First, our team was unable to test the Action outside the Dialogflow console (e.g., in the Google Assistant simulator, on mobile devices, or on assistant hardware) because activity controls were restricted at the administrative level of our domain. To work around this, testers had to sign up with their personal Google accounts. Second, our app was rejected because its name contained “assistant,” and nothing warned us about this restriction during project creation. Finally, adding an app to our Google Home Mini was no trivial feat. The only instructions we could find laid out the following steps:

- Open the Google Home app.

- Tap Account.

- Tap Settings.

- Tap Services.

- Tap Explore.

- Select the application.

Unfortunately, these steps did not work. Eventually, we discovered the following process:

1. Whitelist an alpha tester’s email via the Actions on Google Console (Release -> Alpha -> Manage Alpha Testers).

2. Wait a few hours.

3. Send an opt-in link to a registered alpha tester via the Actions on Google Console (Release -> Alpha -> Manage Alpha Testers).

4. Open this link on a smartphone that has the Google Home app installed. Make sure you are signed into the app with the whitelisted email; otherwise, it will display a blank screen.

5. In the Google Home app, link the Google Home Mini to your Google Account.

6. Invoke the alpha application on your Mini by saying, “Hey Google, talk to [app name].”

It’s possible that steps 3 and 4 are superfluous, but without proper documentation saying otherwise, it is worth being diligent and following them. In the end, the correct process for loading an alpha app onto a Google Home was not unreasonable; discovering the steps was the hard part.

Easy deployment helps ensure that voice applications are rigorously tested by alpha and beta users. Unreliable deployment, on the other hand, puts unnecessary pressure on developers and their project schedules.

Environments & Peripherals

We chose to write our Dialogflow fulfillment code in TypeScript. Unfortunately, at the time of writing, Dialogflow’s TypeScript definitions were rigid and outdated, which somewhat undermined our decision to use TypeScript over JavaScript. Additionally, we used Google Cloud Functions as our fulfillment endpoint, which was only stable on Node 6. As a result, our code could not rely on ES2017+ features such as native async/await.

On the Alexa side, our team used AWS Lambda as the fulfillment endpoint. Overall, our developers found Lambda slightly cruder to work with than Google Cloud Functions, but certainly capable. One small challenge was deploying a local Node module alongside our Lambda functions, something that was simple on Google’s service.

These critiques fall slightly outside this article’s scope. They are still worth mentioning, however, because Dialogflow and the Alexa platform officially recommend these services for voice-enabled applications.

CLI

Our Alexa app was built using ask-cli, Amazon’s command-line tool for Node. It allowed our developers to define the conversation model in JSON and deploy directly from the command line, which scaled much better than working in the GUI console. Describing our models in JSON files let us keep them in our Git repository, avoid navigating a cumbersome GUI as the number and complexity of intents grew, and generate pieces of the model programmatically.
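Generating pieces of the model programmatically can be as simple as expanding phrase fragments into sample utterances. The sketch below assumes the standard Alexa interaction-model JSON layout (`interactionModel.languageModel` with `invocationName`, `intents`, and `types`); the invocation name, the `ScheduleMeetingIntent` intent, its slots, and the `expandSamples` helper are hypothetical examples, not our actual model.

```typescript
interface Slot {
  name: string;
  type: string;
}

interface Intent {
  name: string;
  slots?: Slot[];
  samples: string[];
}

interface InteractionModel {
  interactionModel: {
    languageModel: {
      invocationName: string;
      intents: Intent[];
      types: unknown[];
    };
  };
}

// Expand every combination of verb, object, and suffix into a sample utterance.
// Empty fragments are skipped so "schedule a meeting" has no trailing space.
function expandSamples(verbs: string[], objects: string[], suffixes: string[]): string[] {
  const samples: string[] = [];
  for (const verb of verbs) {
    for (const object of objects) {
      for (const suffix of suffixes) {
        samples.push([verb, object, suffix].filter((part) => part.length > 0).join(" "));
      }
    }
  }
  return samples;
}

// Build the model object; its JSON output is what would be saved to the
// model file that ask-cli deploys.
function buildModel(): InteractionModel {
  return {
    interactionModel: {
      languageModel: {
        invocationName: "cantina scheduler",
        intents: [
          { name: "AMAZON.CancelIntent", samples: [] },
          { name: "AMAZON.HelpIntent", samples: [] },
          { name: "AMAZON.StopIntent", samples: [] },
          {
            name: "ScheduleMeetingIntent",
            slots: [
              { name: "date", type: "AMAZON.DATE" },
              { name: "time", type: "AMAZON.TIME" },
            ],
            samples: expandSamples(
              ["schedule", "book", "set up"],
              ["a meeting", "a call"],
              ["", "{date}", "{date} at {time}"],
            ),
          },
        ],
        types: [],
      },
    },
  };
}

console.log(JSON.stringify(buildModel(), null, 2));
```

Three verbs, two objects, and three suffixes yield eighteen utterances from a few lines of code, and the generated file can be reviewed in Git like any other source.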

Learning to develop from the CLI took longer than it should have, partly because of lackluster documentation. Examples of the model format were scarce, which forced us to build our models in the GUI console first and then examine the JSON output.

Time Formatting

Our team built a cross-platform voice assistant app to help Cantina employees schedule meetings, so most of the app logic depends on time data. If a user says their meeting “starts tomorrow at noon,” Dialogflow includes a timestamp like 2019-03-26T12:00:00+00:00 in the request to our app. That single value gives our code everything it needs, including the date, in a manageable standard format. If the same user asks Alexa, our app receives only 12:00, because Alexa splits date and time into two separate slot types (AMAZON.DATE and AMAZON.TIME). This gives our cross-platform fulfillment code one more asymmetric case to handle.
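One way to absorb this asymmetry is to normalize both platforms’ values into a single timestamp before the rest of the app sees them. This is a minimal sketch: the `TimeInput` type and `toTimestamp` function are hypothetical, and the UTC offset is an assumption, since the Alexa slot values carry no time zone and the skill has to determine the user’s zone separately. As we understand the slot types, Alexa can also return non-calendar values (for example, a week such as `2019-W13` or a period of day such as `MO`), which this sketch rejects rather than guesses at.

```typescript
// Dialogflow sends one combined value; Alexa sends separate
// AMAZON.DATE and AMAZON.TIME slot values.
type TimeInput =
  | { platform: "dialogflow"; dateTime: string }
  | { platform: "alexa"; date: string; time: string };

// Return one ISO 8601 timestamp for either platform, or null when the Alexa
// values are not a plain calendar date plus an HH:MM clock time.
// utcOffset is an assumption: the caller must look up the user's time zone.
function toTimestamp(input: TimeInput, utcOffset = "+00:00"): string | null {
  if (input.platform === "dialogflow") {
    // Already in the shape we want, e.g. "2019-03-26T12:00:00+00:00".
    return input.dateTime;
  }
  const isCalendarDate = /^\d{4}-\d{2}-\d{2}$/.test(input.date);
  const isClockTime = /^\d{2}:\d{2}$/.test(input.time);
  // Week, month, or period-of-day values need their own handling.
  if (!isCalendarDate || !isClockTime) {
    return null;
  }
  return `${input.date}T${input.time}:00${utcOffset}`;
}

console.log(toTimestamp({ platform: "alexa", date: "2019-03-26", time: "12:00" }));
// "2019-03-26T12:00:00+00:00"
```

Keeping the platform check in one function means the scheduling logic downstream only ever handles a single format.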

Alexa’s duration slots use the ISO 8601 duration format. To express “one day four hours fifteen minutes,” Alexa returns P1DT4H15M. Although the information is easy enough to extract, this format seems cumbersome and forces the developer to write a conversion method for what seems like an unnecessary novelty. We think using milliseconds, for example, would be much more efficient.
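The conversion itself is short. Below is a minimal sketch of a hypothetical `isoDurationToMs` helper, assuming durations arrive in the week/day/hour/minute/second subset of ISO 8601. Years and months have no fixed length in milliseconds, so the function returns null for them instead of guessing.

```typescript
// Matches P[n]W[n]DT[n]H[n]M[n]S, with every component optional.
const DURATION_RE =
  /^P(?:(\d+)W)?(?:(\d+)D)?(?:T(?:(\d+)H)?(?:(\d+)M)?(?:(\d+(?:\.\d+)?)S)?)?$/;

// Convert an ISO 8601 duration such as "P1DT4H15M" to milliseconds.
// Returns null for empty or unsupported forms (e.g. "P", "P1DT", "P1Y").
function isoDurationToMs(duration: string): number | null {
  const match = DURATION_RE.exec(duration);
  if (match === null) {
    return null; // years, months, or malformed input
  }
  const [, weeks, days, hours, minutes, seconds] = match;
  const timeFields = [hours, minutes, seconds];
  // A "T" designator must be followed by at least one time component.
  if (duration.includes("T") && timeFields.every((f) => f === undefined)) {
    return null;
  }
  // "P" on its own carries no information.
  if ([weeks, days, ...timeFields].every((f) => f === undefined)) {
    return null;
  }
  const n = (f: string | undefined): number => (f === undefined ? 0 : Number(f));
  return (
    n(weeks) * 604800000 +
    n(days) * 86400000 +
    n(hours) * 3600000 +
    n(minutes) * 60000 +
    n(seconds) * 1000
  );
}

console.log(isoDurationToMs("P1DT4H15M")); // 101700000
```

P1DT4H15M works out to 86,400,000 + 14,400,000 + 900,000 = 101,700,000 ms.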

Overall, these are not insurmountable hurdles, but they are hurdles nonetheless on the path to better voice UI. Small things like these take time, wear on developer morale, and, most importantly, make building a true end-to-end pipeline for voice apps more complicated.

It is easy to dismiss nitpicks as unimportant, but a slight annoyance or limitation can lead engineers to shortcuts, “hacks,” compromises, and confusion at a higher level.

Documentation

Both platforms provide extensive documentation that is more than sufficient for simple projects and mostly comprehensive for complex ones. Still, we believe the documentation for both platforms could be improved. The Google Assistant and Dialogflow documentation was easier on the eyes than the Alexa platform documentation, but some important Dialogflow sections were outdated at the time of our development. Certain API specification pages for the Alexa platform were unreasonably long, making it difficult to cross-reference them and find the sections we needed. Documentation remains a pain point, and it probably always will be.

Integrations

Dialogflow can integrate directly with a suite of third-party platforms, including Facebook Messenger, Slack, and Twitter. There were still hiccups in the integration process, however. Like parts of Dialogflow’s documentation, some integration features had fallen out of date. For example, Slack had updated its bot API events, while Dialogflow, at the time of this writing, was still listening for the older events. As a result, users could only interact with our bot through direct messages. That worked well, but we also wanted users to interact with the bot inside channels.

Slack is just one of many integrations Dialogflow offers, so it is understandable that keeping everything updated takes time. Overall, we were happy the platform had these capabilities, but they add another layer of nuance to developing voice applications.

Developer Consoles

The Dialogflow developer console sports Material Design and is built with beginner and non-technical users in mind. Our development team found that it made building simple voice applications very easy. Heavier usage, though, revealed unpolished parts of the console. For example, the “add follow-up intent” feature felt like a needless shortcut for configuring intent context directly. There were also a number of confusing alerts, such as “It looks like you do not have other agents to move or copy intents there.” Furthermore, we found that the tree-based system for intent handling made mapping complex conversations extremely frustrating. We were forced to duplicate intents as the conversation tree progressed, making our simple context flows far more cumbersome than necessary.
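The alternative to follow-up intents is to set context directly from fulfillment code. The sketch below assumes the Dialogflow ES v2 webhook JSON format, where the request carries a `session` path and the response can return `outputContexts` whose names take the form `<session>/contexts/<context-id>`. Any intent that lists that context as an input context can then match on the next turn, regardless of where it sits in the console’s tree. The `withContext` helper, the context ID, and the parameters are hypothetical.

```typescript
interface OutputContext {
  name: string;
  lifespanCount: number;
  parameters?: Record<string, unknown>;
}

interface WebhookResponse {
  fulfillmentText: string;
  outputContexts: OutputContext[];
}

// Build a webhook response that activates one context for the next
// `lifespanCount` conversational turns. `session` is the session path from
// the incoming request, e.g. "projects/<project>/agent/sessions/<id>".
function withContext(
  session: string,
  text: string,
  contextId: string,
  lifespanCount: number,
  parameters: Record<string, unknown> = {},
): WebhookResponse {
  return {
    fulfillmentText: text,
    outputContexts: [
      { name: `${session}/contexts/${contextId}`, lifespanCount, parameters },
    ],
  };
}

const response = withContext(
  "projects/demo/agent/sessions/abc123",
  "What time works for the meeting?",
  "awaiting_meeting_time",
  2,
  { attendee: "Kole" },
);
console.log(JSON.stringify(response, null, 2));
```

Because the context is set in code, the same follow-up intent can be reused from several points in a conversation instead of being duplicated under each branch.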

Conclusion

We examined two dominant platforms in the voice UI space and found them flawed in a number of ways. From our perspective, even the smallest weakness in a development tool can have a butterfly effect on the end product’s usability. Furthermore, as firms besides ours have demonstrated, voice UI needs all the help it can get to improve usability. Our team thinks addressing small, concrete issues like those discussed above is a realistic way to chip away at the broader problem.

Voice UI has come a long way, and together, we will take it much further.