The Golden Promise

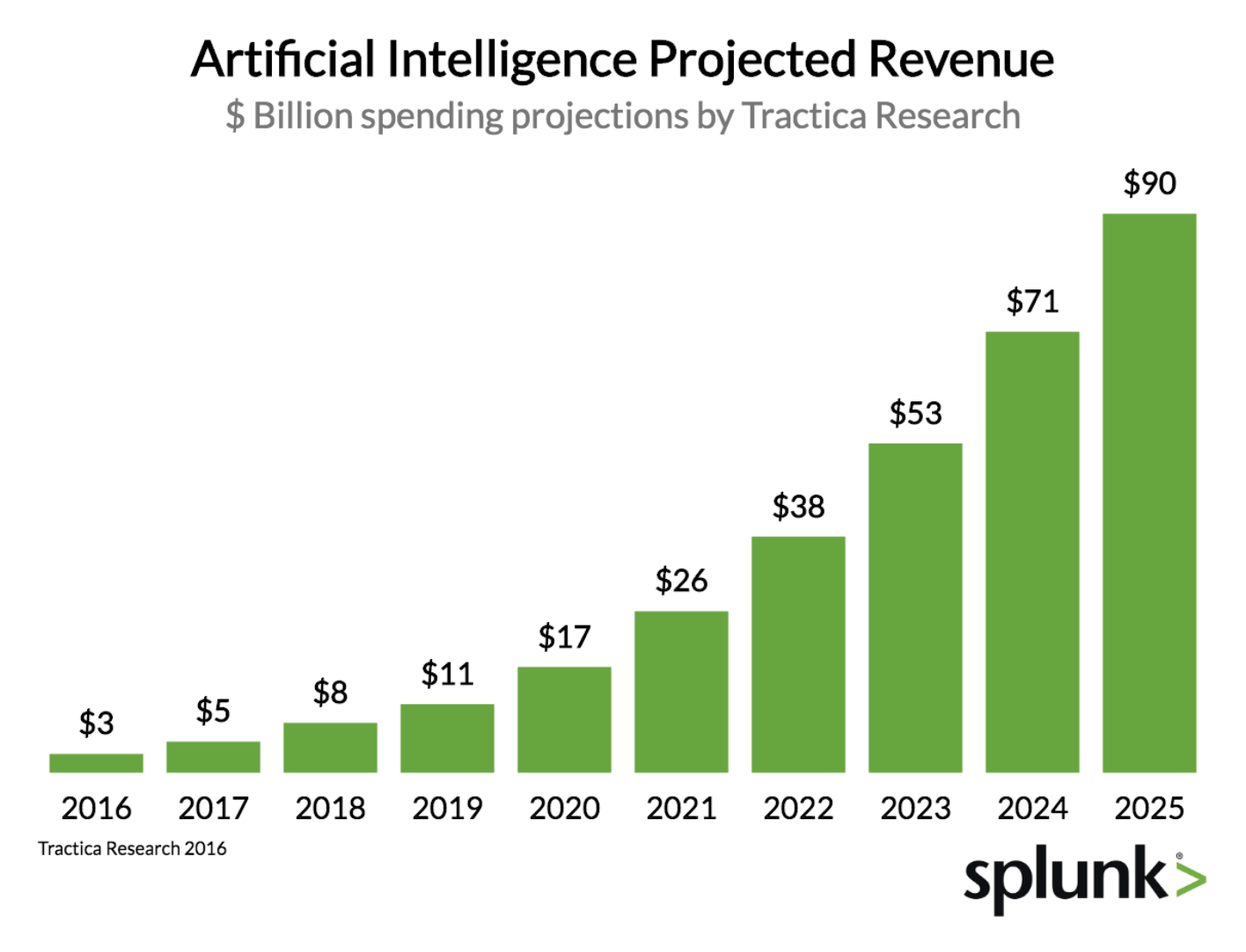

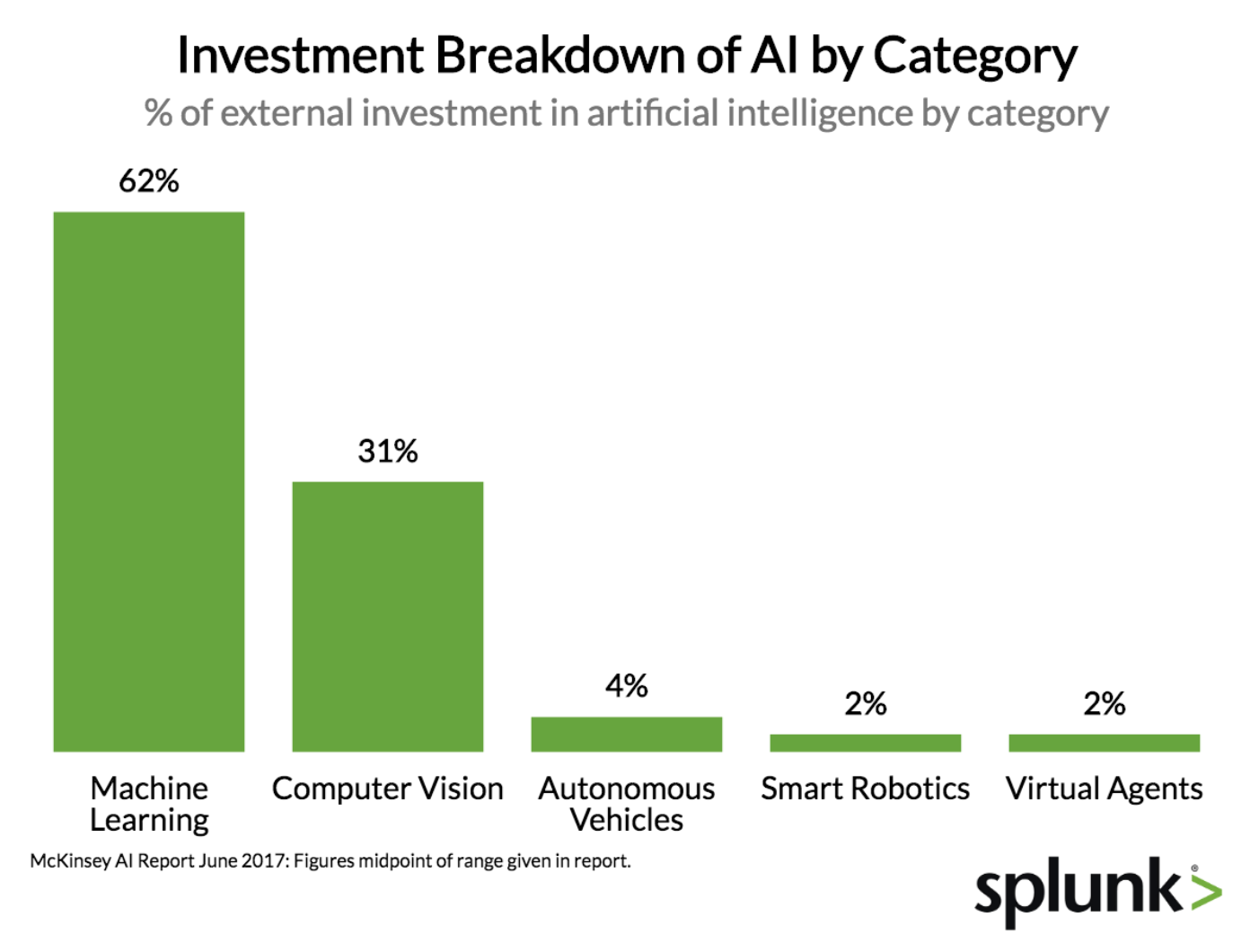

With startups, enterprises, and governments all pouring funds into artificial intelligence, the technology is poised to explode in the coming years. Tech and non-tech companies alike are racing to get ahead of competitors and tap into its potential, with corporate giants leading the way. AI seems to have it all: from the sci-fi-esque imagery to the big-name recognition to the enormous, universal market potential. With such a golden promise, it’s little wonder that so many companies are on board the AI train. But with so much hype comes a lot of noise—how can we be sure that the current wave of hype isn’t merely a passing fad? To help answer this question, let’s explore AI’s strengths and weaknesses.

A Suitcase Term

Marvin Minsky, a legendary pioneer in AI research, once characterized “artificial intelligence” as a “suitcase term.” It’s an apt expression: the phrase travels far and wide, but so many applications and technologies are labeled “artificial intelligence” that it is difficult to reduce it to a single use case. As a result, it’s easy to buy into an “AI product” that does not really make use of contemporary ML or deep learning.

Case in point: Cantina recently attended this year’s AI World Expo, an exhibition marketed as “industry’s largest independent AI business event,” with over 50 companies showcasing their services. The majority of them branded themselves as general “Artificial Intelligence providers,” but while we found innovative ML-based ideas — a company using satellite imagery to identify and analyze shipping routes, for instance — we quickly realized that many of these companies were only nominally invested in AI-based tools.

The issue wasn’t that these companies hadn’t developed machine learning tools, but that they were treating these tools as though they were end-products. For many companies, this misstep took the form of an “insights engine” that offered automated suggestions of vague or dubious origin. Some took it quite far: one analytics company, for instance, promised “decisions from an Artificial Intelligence platform that understands you.” Though we are past the days of Clippy, ML-based virtual assistants are certainly not going to “understand” you as a human would. Nevertheless, many companies implement AI tools outside their effective scope, resulting in brittle, ungrounded products.

Catastrophic Forgetting

This issue extends beyond small startups. Take the Amazon recruiting tool that was scrapped when it was discovered to carry a bias against female candidates, or the NYU professor who convinced his ML software that a stop sign was merely a speed limit sign by sticking a Post-it note on it. It’s easy to pack extra baggage into your AI suitcase, but make no mistake: AI is a tool, not an end-service.

Let’s unpack what that means. Like all tools, contemporary AI is directed toward narrow problems. “Narrow” is not synonymous with “small”: AI tools have already provided answers for once-impossible questions in natural language processing or image recognition. But AI, like all other programming tools, is unable to understand the questions it answers.

Give an ML algorithm a static context, like a series of Go board-states, an image set of human faces, or petabytes of driving data, and it is capable of outputting a meaningful answer. But with an incomplete or ambiguous context, the bias it carries over from one subcontext (say, the shape of an eye, gleaned from a series of portraits) may amplify the importance of weaker, less relevant patterns in another (the shape of a skateboard wheel, taken from a collection of action shots). The resulting output may be improbable (a skateboard-face) or worse: believable but ultimately false.

This underlying issue is known as “catastrophic forgetting,” or AI’s inability to preserve a pattern across differing contexts: a model trained on new data tends to overwrite what it learned from the old. For us, forgetting is not exactly a conscious decision, but what we forget is normally a reflection of what we value. The jury is out on where we derive these values, but contemporary AI merely values the patterns it detects most clearly and most often. AI has no sense of ‘bad data’ that should be ignored outright; it simply gobbles up everything put before it.
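To make the idea concrete, here is a minimal sketch of a model losing one pattern when it is trained on another. It is a toy under stated assumptions, not a real neural network: a single-weight linear model is trained by gradient descent on one made-up context, then on a second, and its error on the first is measured before and after. The names `train`, `mse`, `task_a`, and `task_b` are hypothetical and exist only for this illustration.

```python
# Toy illustration only: a one-weight linear model (prediction = w * x),
# trained with plain per-sample gradient descent on squared error.
# All names and data here are made up for the example.

def train(w, data, lr=0.1, epochs=200):
    """Update the single weight w on (x, y) pairs and return it."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= lr * grad
    return w

def mse(w, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # context A: the pattern is y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # context B: the pattern is y = -x

w = train(0.0, task_a)
error_a_before = mse(w, task_a)  # the model has learned context A

w = train(w, task_b)             # keep training, now only on context B
error_a_after = mse(w, task_a)   # the same weight no longer fits context A

print("error on A before seeing B:", error_a_before)
print("error on A after seeing B: ", error_a_after)
```

Nothing in the training loop tells the model that context A was worth keeping: the error on A starts near zero and rises sharply once the weight has been pulled toward B. Real systems are far larger, but the example shows why someone has to decide which patterns matter.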

Machine Oracles

Unfortunately, there are already worrying deferrals to AI-produced output as though it were a sort of ‘machine oracle,’ such as the AI used to help judge criminal defendants. Granting AI the authority to evaluate our lives is a gross oversight of its limitations. It does not ‘choose’ to remember or forget anything itself; it cannot value the data it ingests.

If we want to invest in AI, then, we need humans (data scientists, statisticians, industry specialists) capable of defining its context, or, in other words, ‘asking’ the AI the right questions. Their job is akin to a translator’s; whether they are manipulating the AI’s biases directly or deciding what data is relevant, they are carrying the context of a nuanced, real-world problem (e.g. “How can we create a fairer, more efficient hiring process?”) into a binary, virtual world. There is no perfect translation between these two environments; all the same, a translator who understands the subtleties of both worlds will produce a much more meaningful output than one who provides too much, too little, or the wrong sort of context. As a rule of thumb, if the context an ML tool is operating within cannot be adequately explained by its creators, there are likely deeper issues with its application.

Data Capital

Historians and analysts alike have used the term “Information Age” since at least the 1980s to describe the growing dominance of data over our economy. Large corporations have already built their success on developing and controlling data, as with Walmart’s heavy adoption of databases in the 1980s. And now, with tech giants rapidly growing their data teams and corporate leaders like IBM’s CEO, Ginni Rometty, describing data as “the world’s next great natural resource,” it’s all but guaranteed that the largest share of ‘data capital’ rests with the movers and shakers. That’s not nearly as constricting for potential AI prospectors as it sounds, not only because movers and shakers are willing to cooperate, but because data is not nearly as fixed a resource as its physical counterparts. With some ingenuity, we can discover the value of previously unexplored or unrealized data models.

But first, what is data capital? Though it does correlate with the sheer volume of data these corporations have access to, it also connotes a specialization of that data. For instance, Google may have a whole lot of search engine data to work with, but it does not have the specialized telemetry data that a giant like Monsanto or GM collects about the markets it operates in. Though Google is spending a good deal more on AI development than those traditional companies, it does not have the ‘data capital’ to compete effectively in those sectors. If you’re looking for a deeper dive into the importance of data capital, I recommend this article by Benedict Evans.

This is exactly why it’s so crucial to include industry subject matter experts in discussions of how to apply AI technologies to solve real customer problems: identifying the right issues to tackle should lead to deciding on the best technology and data samples to use, rather than letting the tech define the limits of the problems you need to address. It’s not difficult to find engineers capable of writing neural network algorithms; what’s much more valuable are the individuals able to design and capture robust, flexible, and meaningful datasets for an ML tool to capitalize upon.

Wrapping Up

The speed with which product and service companies are embracing AI will certainly change our world. However, contemporary artificial intelligence is not a one-stop solution to satisfy all flavors of customer and business problems, though we’re at a stage where many service firms are marketing it as one. It is vital to let human-centered strategy drive the application of AI technologies, rather than the other way around.

At MIT’s AI and the Future of Work Congress this year, MIT professor Erik Brynjolfsson capped his talk with a reflection on AI’s enormous potential. He likened artificial intelligence to a hammer, adding that it’s up to us to decide how we want to wield it. “Wield” is the operative word here: it’s not enough to offer an AI service for others to make sense of. In the midst of this AI gold rush, we have to push beyond selling pickaxes. Success lies, as ever, not merely in the tools we wield but in how precisely we choose to use them.