A few years ago, the TensorFlow project appeared and opened up a whole new range of machine learning capabilities. An open-source platform with a host of tools for training and deploying machine learning models, TensorFlow has been one of the driving forces behind the rapid adoption of ML. It is a great tool for experimentation, and since its initial release it has been ported to the browser and integrated into mobile devices. Hardware designed and dedicated for machine learning, such as Google’s Coral boards, has been bringing TensorFlow and other ML systems to the edge; that is, to smaller, cheaper devices meant to be deployed out in the world, rather than to the big servers living in the data centers that back the Internet of Things and the cloud.

But things are about to get even more interesting, because TensorFlow is starting to show up on cheap, low-power microcontrollers. This is big news in a small package.

The Internet of Things has long been held out as a promising innovation that would change the world. In the first pass at IoT, driven by RFID, we tried to provide a way for machines to gain more understanding of the world around them by attaching metadata to everything in sight. This vision of an Internet of Things has not entirely disappeared, but it is no longer the primary way we conceive of a smart, connected world.

We also had the industrial M2M concept, where machines were connected, forming networks of devices that could coordinate, cooperate, and monitor. These networks are often local and focused on specific manufacturing and warehousing needs. M2M remains a valuable tool, and, in recent years, has been expanded into the concept of the Industrial Internet of Things, or IIoT.

The next version of IoT presented us with the concept of smart devices, connected to the infrastructure of the Internet. This vision combined some of the earlier ideas, bringing in the notion of metadata and machine-to-machine connection, but relying heavily on the Internet as the primary network. This version of smart things is connected, able to sense and act on the physical world. Many of the early devices in this version of IoT were built on relatively inexpensive, low-power microcontrollers, tiny computing devices with meager capabilities compared to their beefier cousins that inhabit our laptops and desktops. And because of the limited resources in these systems, the amount of smarts that could be deployed at the edge was limited. To do really smart stuff, and still be able to scale, IoT devices had to rely on the cloud, sending data up to servers to be processed and receiving commands to act for any complex situation. One way to look at this is that the edge was tightly tied to the center.

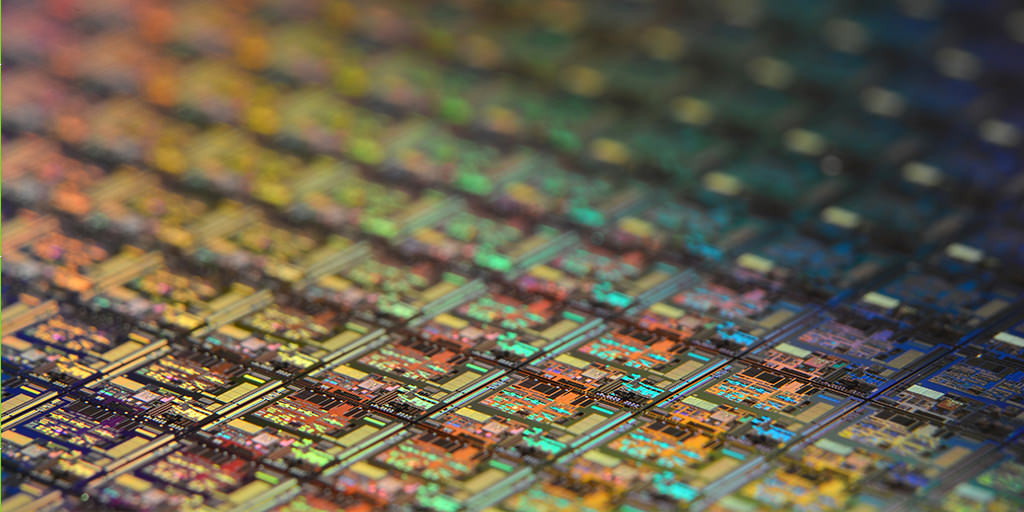

But microcontrollers are computers, and, like all computers, they get to take advantage of the scaling of computing capability. In 1965, Gordon Moore observed that the number of transistors that fit in a given area was doubling roughly every year, a pace he later revised to about every two years.[1] This steady increase in density has driven the creation of more powerful computers for decades, while also allowing us to reduce the size of computers significantly. We have also seen other techniques that have improved the power of all computers, such as multiple cores. And even the smallest computers have benefited, including microcontrollers.

And that brings us back to where we started. The increase in computing power for small, relatively low-power devices means that IoT has matured with more capability than we thought was possible. Just a couple of years ago, it would have been hard to imagine devices capable of complex tasks like inferencing audio or video data locally via machine learning. Today, it’s not only doable, but it’s also cheap and available.

In the past, your best bet for doing this type of ML work at the edge would have been a still relatively expensive system, such as the purpose-built Nvidia Jetson boards and Google Coral accelerators. Such systems are designed to handle machine learning tasks. They are often designed along the same fast-data-crunching principles as the GPU boards that power the sophisticated graphics in modern video games and VR displays.

But it turns out that, at least for some tasks, we can now do that work using more-or-less commodity microcontrollers, thanks to software like TensorFlow Lite for Microcontrollers. This means big things in small packages.

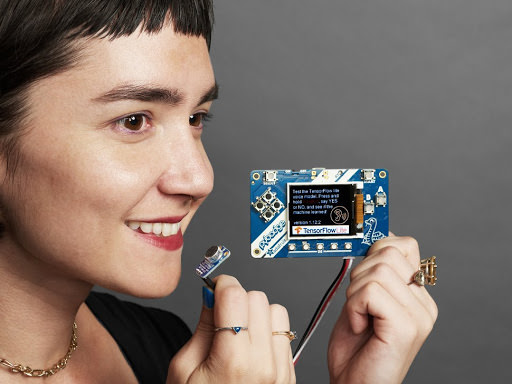

For example, Adafruit, a well-regarded creator of maker-friendly electronics founded by Limor Fried, offers a kit for TensorFlow Lite for Microcontrollers for a mere $45. The kit is powered by an ARM Cortex-M4 microcontroller that runs at 120 MHz and has 192 kilobytes of RAM. For comparison, that’s a fraction of the processing power of the original iPhone. And yet, running TensorFlow Lite, that little microcontroller can run inference on incoming audio data using a basic ML model. In other words, it can do voice recognition tasks without having to reach back up to the cloud. Wow.

And this is just the beginning.

Being able to run inference on a machine learning model, and so gain insight at the edge, has vast implications for IoT. For example, it’s now feasible to handle tasks like natural language processing locally. This means that not only can more devices handle verbal commands, but they can also do it in a way that is more secure and private. Instead of sending your entire spoken conversation to the cloud, where it may be exposed to an unauthorized party or stored long after you have uttered the words, a system at the edge can infer your meaning and send back only the intent. This also means that the amount of data on the wire can be drastically reduced.
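To put a rough number on that reduction, here is a back-of-the-envelope sketch. Everything in it is an assumption made for illustration: the audio format (16 kHz, 16-bit mono), the three-second command, and the shape of the intent message are not taken from any particular device or service.

```python
# Back-of-the-envelope comparison; every number here is an assumption.
import json

SAMPLE_RATE_HZ = 16_000   # assumed: a common sample rate for speech audio
BYTES_PER_SAMPLE = 2      # assumed: 16-bit mono PCM
UTTERANCE_SECONDS = 3     # assumed: length of a short spoken command


def raw_audio_bytes(seconds: float) -> int:
    """Size of the uncompressed audio a cloud-first device would upload."""
    return int(seconds * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)


def intent_bytes(intent: dict) -> int:
    """Size of a compact JSON message describing what the user meant."""
    return len(json.dumps(intent, separators=(",", ":")).encode("utf-8"))


# Hypothetical intent produced by an on-device model.
intent = {"intent": "lights_on", "room": "kitchen", "confidence": 0.93}

audio = raw_audio_bytes(UTTERANCE_SECONDS)
message = intent_bytes(intent)
print(f"raw audio: {audio} bytes")
print(f"intent:    {message} bytes")
print(f"reduction: about {audio // message}x")
```

Under these assumptions the raw audio comes to 96,000 bytes, while the intent message stays well under 100 bytes. Real systems usually compress audio before sending it, so the gap in practice would be smaller, but it would still be large.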

Smarter, ML-capable microcontrollers are also able to handle one of the trickier problems of IoT: what to do when an edge device becomes disconnected from the service that backs it, or from the Internet as a whole. Because more of the capability is inherent to the device, edge computing devices can operate autonomously from the Internet when needed. This makes more resilient services possible and reassures end users that these systems will operate the way they want. It also might provide a solution for one of the things I have written about before, namely that an IoT device is really the physical endpoint of a service. If that service goes away, the hardware itself becomes useless. With local smarts, a device may be able to continue to provide functionality even when it can’t phone home (and even if “home” ceases to exist).
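One way to picture that resilience is a device that always decides locally and treats the network as optional. The sketch below is a toy model, not a real device API: the `EdgeDevice` class, its temperature threshold, and the in-memory queue are all hypothetical, and the `infer` method is only a stand-in for an on-device model.

```python
from collections import deque


class EdgeDevice:
    """Toy model of a device that decides locally and reports when it can."""

    def __init__(self, max_pending: int = 100):
        # Bounded queue: when it is full, the oldest report is dropped first.
        self.pending = deque(maxlen=max_pending)
        self.online = False

    def infer(self, reading: float) -> str:
        # Stand-in for an on-device model; the threshold is made up.
        return "too_hot" if reading > 30.0 else "ok"

    def handle(self, reading: float) -> str:
        """Act on a reading immediately, whether or not the network is up."""
        decision = self.infer(reading)
        self.pending.append({"reading": reading, "decision": decision})
        if self.online:
            self.flush()
        return decision

    def flush(self) -> list:
        """Send queued reports; here 'sending' just returns and clears them."""
        sent = list(self.pending)
        self.pending.clear()
        return sent


device = EdgeDevice()
print(device.handle(35.2))   # decided locally while offline
print(len(device.pending))   # one report is waiting for a connection
device.online = True
device.handle(21.0)
print(len(device.pending))   # queue is drained once the device is back online
```

The design choice worth noticing is that `handle` never waits on the network: the decision comes first, and reporting is a best-effort step that catches up later.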

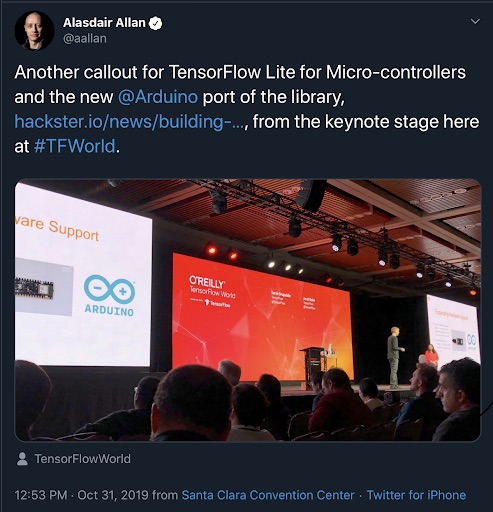

Alasdair Allan has been tracking the performance and capabilities of machine learning on the edge for the past year or so. He also recently wrote about the port of TensorFlow Lite to the Arduino framework, a hacker/maker-friendly framework for developing on microcontrollers. It made what was once a pretty esoteric field that required specialized programming skills more accessible to everyone.[2] Other microcontroller development environments, such as CircuitPython, are likely to follow suit. It is safe to say that we can expect an explosion of edge computing for IoT in the near future. And with it, another chance to fulfill the promise of a world filled with smart, connected devices.


1. It should be noted that Gordon Moore’s observation, which has since come to be known as Moore’s Law, is not really about computing power per se. There are a lot of factors that go into the increasing capabilities of computing devices. The last few years have seen roadblocks, many of them related to physical limits, that have slowed and perhaps stopped the increase. Other techniques, such as multithreaded computing across multiple cores, have been more responsible for the recent increases in capability. But it seems likely that we will need new classes of hardware in the near future if we want to see these capabilities increased.

2. Arduino, which began as a tightly coupled marriage between hardware and software, has blossomed to support an ecosystem with hundreds of microcontroller development boards across dozens of hardware architectures. It has lowered the bar for experimenting with and producing products that use microcontrollers. We have used Arduino in some of our hardware and IoT prototyping projects. It is straightforward to use and has sped up our rapid prototyping.